SpinKube documentation

Everything you need to know about SpinKube.

First steps

To get started with SpinKube, follow our Quickstart guide.

How the documentation is organized

SpinKube has a lot of documentation. A high-level overview of how it’s organized will help you know

where to look for certain things.

- Installation guides cover how to install SpinKube on various

platforms.

- Topic guides discuss key topics and concepts at a fairly high level and

provide useful background information and explanation.

- Reference guides contain technical reference for APIs and other

aspects of SpinKube’s machinery. They describe how it works and how to use it but assume that you

have a basic understanding of key concepts.

- Contributing guides show how to contribute to the SpinKube project.

- Miscellaneous guides cover topics that don’t fit neatly into either of

the above categories.

1 - Overview

A high level overview of the SpinKube sub-projects.

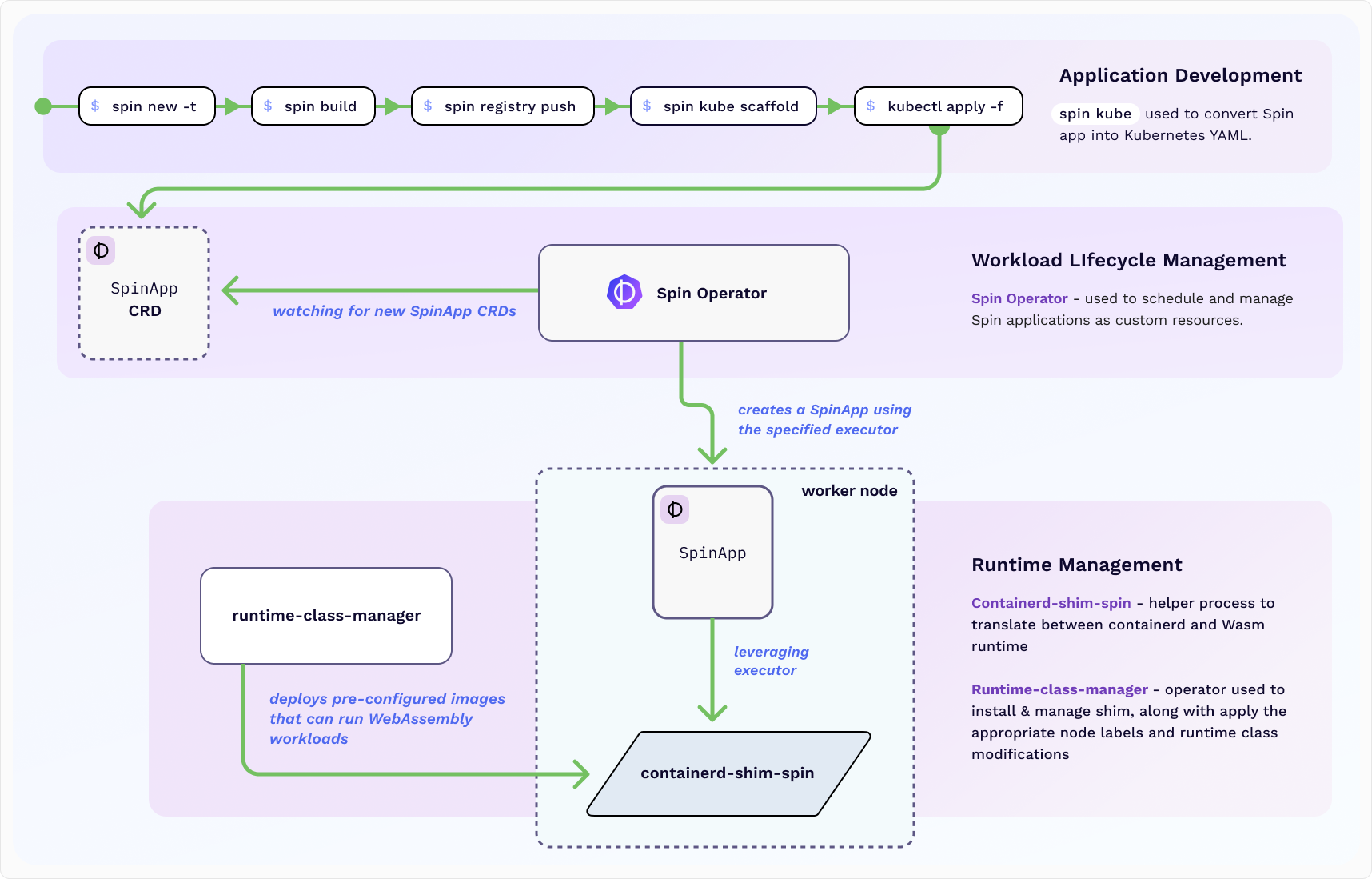

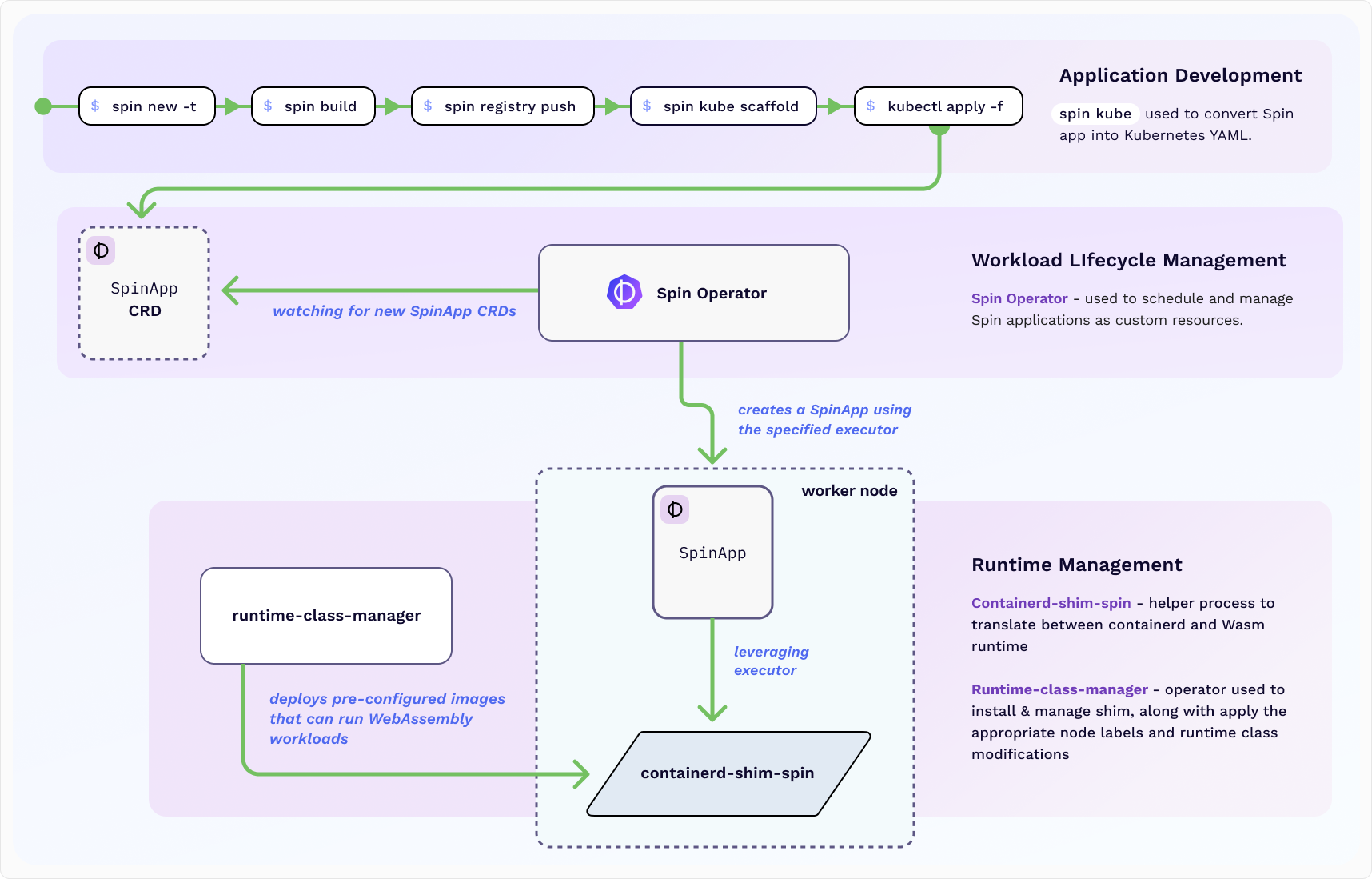

Project Overview

SpinKube is an open source project that streamlines the

experience of deploying and operating Wasm workloads on Kubernetes, using Spin

Operator in tandem with

runwasi and runtime class

manager.

With SpinKube, you can leverage the advantages of using WebAssembly (Wasm) for your workloads:

- Artifacts are significantly smaller in size compared to container images.

- Artifacts can be quickly fetched over the network and started much faster (*Note: We are aware of

several optimizations that still need to be implemented to enhance the startup time for

workloads).

- Substantially fewer resources are required during idle times.

Thanks to SpinKube, we can do all of this while integrating with Kubernetes primitives including

DNS, probes, autoscaling, metrics, and many more cloud native and CNCF projects.

SpinKube watches Spin App Custom Resources and realizes

the desired state in the Kubernetes cluster. The foundation of this project was built using the

kubebuilder framework and contains a Spin App

Custom Resource Definition (CRD) and controller.

SpinKube is a Cloud Native Computing Foundation sandbox project.

To get started, check out our Quickstart guide.

2 - Installation

Before you can use SpinKube, you’ll need to get it installed. We have several complete installation guides that covers all the possibilities; these guides will guide you through the process of installing SpinKube on your Kubernetes cluster.

2.1 - Quickstart

Learn how to setup a Kubernetes cluser, install SpinKube and run your first Spin App.

This Quickstart guide demonstrates how to set up a new Kubernetes cluster, install the SpinKube and

deploy your first Spin application.

Prerequisites

For this Quickstart guide, you will need:

- kubectl - the Kubernetes CLI

- Rancher Desktop or Docker

Desktop for managing containers and Kubernetes on your

desktop

- k3d - a lightweight Kubernetes distribution

that runs on Docker

- Helm - the package manager for Kubernetes

Set up Your Kubernetes Cluster

- Create a Kubernetes cluster with a k3d image that includes the

containerd-shim-spin prerequisite already

installed:

k3d cluster create wasm-cluster \

--image ghcr.io/spinframework/containerd-shim-spin/k3d:v0.22.0 \

--port "8081:80@loadbalancer" \

--agents 2

Note: Spin Operator requires a few Kubernetes resources that are installed globally to the

cluster. We create these directly through kubectl as a best practice, since their lifetimes are

usually managed separately from a given Spin Operator installation.

- Install cert-manager

kubectl apply -f https://github.com/cert-manager/cert-manager/releases/download/v1.14.3/cert-manager.yaml

kubectl wait --for=condition=available --timeout=300s deployment/cert-manager-webhook -n cert-manager

- Apply the Runtime

Class

used for scheduling Spin apps onto nodes running the shim:

Note: In a production cluster you likely want to customize the Runtime Class with a nodeSelector

that matches nodes that have the shim installed. However, in the K3d example, they’re installed on

every node.

kubectl apply -f https://github.com/spinframework/spin-operator/releases/download/v0.6.1/spin-operator.runtime-class.yaml

- Apply the Custom Resource Definitions

used by the Spin Operator:

kubectl apply -f https://github.com/spinframework/spin-operator/releases/download/v0.6.1/spin-operator.crds.yaml

Deploy the Spin Operator

Execute the following command to install the Spin Operator on the K3d cluster using Helm. This will

create all of the Kubernetes resources required by Spin Operator under the Kubernetes namespace

spin-operator. It may take a moment for the installation to complete as dependencies are installed

and pods are spinning up.

# Install Spin Operator with Helm

helm install spin-operator \

--namespace spin-operator \

--create-namespace \

--version 0.6.1 \

--wait \

oci://ghcr.io/spinframework/charts/spin-operator

Lastly, create the shim executor:

kubectl apply -f https://github.com/spinframework/spin-operator/releases/download/v0.6.1/spin-operator.shim-executor.yaml

Run the Sample Application

You are now ready to deploy Spin applications onto the cluster!

- Create your first application in the same

spin-operator namespace that the operator is running:

kubectl apply -f https://raw.githubusercontent.com/spinframework/spin-operator/main/config/samples/simple.yaml

- Forward a local port to the application pod so that it can be reached:

kubectl port-forward svc/simple-spinapp 8083:80

- In a different terminal window, make a request to the application:

curl localhost:8083/hello

You should see:

Next Steps

Congrats on deploying your first SpinApp! Recommended next steps:

2.2 - Installing the `spin kube` plugin

Learn how to install the kube plugin.

The kube plugin for spin (The Spin CLI) provides first class experience for working with Spin

apps in the context of Kubernetes.

Prerequisites

Ensure you have the Spin CLI (version 2.3.1 or

newer) installed on your machine.

Install the plugin

Before you install the plugin, you should fetch the list of latest Spin plugins from the

spin-plugins repository:

# Update the list of latest Spin plugins

spin plugins update

Plugin information updated successfully

Go ahead and install the kube using spin plugin install:

# Install the latest kube plugin

spin plugins install kube

At this point you should see the kube plugin when querying the list of installed Spin plugins:

# List all installed Spin plugins

spin plugins list --installed

cloud 0.7.0 [installed]

cloud-gpu 0.1.0 [installed]

kube 0.1.1 [installed]

pluginify 0.6.1 [installed]

Compiling from source

As an alternative to the plugin manager, you can download and manually install the plugin. Manual

installation is commonly used to test in-flight changes. For a user, installing the plugin using

Spin’s plugin manager is better.

Please refer to the spin-plugin-kube GitHub

repository for instructions on how to compile the

plugin from source.

2.3 - Installing with Helm

This guide walks you through the process of installing SpinKube using

Helm.

Prerequisites

For this guide in particular, you will need:

- kubectl - the Kubernetes CLI

- Helm - the package manager for Kubernetes

Install Spin Operator With Helm

The following instructions are for installing Spin Operator using a Helm chart (using helm install).

Prepare the Cluster

Before installing the chart, you’ll need to ensure the following are installed:

kubectl apply -f https://github.com/cert-manager/cert-manager/releases/download/v1.14.5/cert-manager.yaml

- Kwasm Operator is required to install WebAssembly shims

on Kubernetes nodes that don’t already include them. Note that in the future this will be replaced

by runtime class manager.

# Add Helm repository if not already done

helm repo add kwasm http://kwasm.sh/kwasm-operator/

# Install KWasm operator

helm install \

kwasm-operator kwasm/kwasm-operator \

--namespace kwasm \

--create-namespace \

--set kwasmOperator.installerImage=ghcr.io/spinframework/containerd-shim-spin/node-installer:v0.22.0

# Provision Nodes

kubectl annotate node --all kwasm.sh/kwasm-node=true

Chart prerequisites

Now we have our dependencies installed, we can start installing the operator. This involves a couple

of steps that allow for further customization of Spin Applications in the cluster over time, but

here we install the defaults.

kubectl apply -f https://github.com/spinframework/spin-operator/releases/download/v0.6.1/spin-operator.crds.yaml

- Next we create a RuntimeClass that points to the

spin

handler called wasmtime-spin-v2. If you are deploying to a production cluster that only has a shim

on a subset of nodes, you’ll need to modify the RuntimeClass with a nodeSelector::

kubectl apply -f https://github.com/spinframework/spin-operator/releases/download/v0.6.1/spin-operator.runtime-class.yaml

- Finally, we create a

containerd-spin-shim SpinAppExecutor. This tells the Spin Operator to use the RuntimeClass we

just created to run Spin Apps:

kubectl apply -f https://github.com/spinframework/spin-operator/releases/download/v0.6.1/spin-operator.shim-executor.yaml

Installing the Spin Operator Chart

The following installs the chart with the release name spin-operator:

# Install Spin Operator with Helm

helm install spin-operator \

--namespace spin-operator \

--create-namespace \

--version 0.6.1 \

--wait \

oci://ghcr.io/spinframework/charts/spin-operator

Upgrading the Chart

Note that you may also need to upgrade the spin-operator CRDs in tandem with upgrading the Helm

release:

kubectl apply -f https://github.com/spinframework/spin-operator/releases/download/v0.6.1/spin-operator.crds.yaml

To upgrade the spin-operator release, run the following:

# Upgrade Spin Operator using Helm

helm upgrade spin-operator \

--namespace spin-operator \

--version 0.6.1 \

--wait \

oci://ghcr.io/spinframework/charts/spin-operator

Uninstalling the Chart

To delete the spin-operator release, run:

# Uninstall Spin Operator using Helm

helm delete spin-operator --namespace spin-operator

This will remove all Kubernetes resources associated with the chart and deletes the Helm release.

To completely uninstall all resources related to spin-operator, you may want to delete the

corresponding CRD resources and the RuntimeClass:

kubectl delete -f https://github.com/spinframework/spin-operator/releases/download/v0.6.1/spin-operator.shim-executor.yaml

kubectl delete -f https://github.com/spinframework/spin-operator/releases/download/v0.6.1/spin-operator.runtime-class.yaml

kubectl delete -f https://github.com/spinframework/spin-operator/releases/download/v0.6.1/spin-operator.crds.yaml

2.4 - Installing on Azure Kubernetes Service

In this tutorial you’ll learn how to deploy SpinKube on Azure Kubernetes Service (AKS).

In this tutorial, you install Spin Operator on an Azure Kubernetes Service (AKS) cluster and deploy

a simple Spin application. You will learn how to:

- Deploy an AKS cluster

- Install Spin Operator Custom Resource Definition and Runtime Class

- Install and verify containerd shim via Kwasm

- Deploy a simple Spin App custom resource on your cluster

Prerequisites

Please ensure you have the following tools installed before continuing:

- kubectl - the Kubernetes CLI

- Helm - the package manager for Kubernetes

- Azure CLI - cross-platform CLI

for managing Azure resources

Provisioning the necessary Azure Infrastructure

Before you dive into deploying Spin Operator on Azure Kubernetes Service (AKS), the underlying cloud

infrastructure must be provisioned. For the sake of this article, you will provision a simple AKS

cluster. (Alternatively, you can setup the AKS cluster following this guide from

Microsoft.)

# Login with Azure CLI

az login

# Select the desired Azure Subscription

az account set --subscription <YOUR_SUBSCRIPTION>

# Create an Azure Resource Group

az group create --name rg-spin-operator \

--location germanywestcentral

# Create an AKS cluster

az aks create --name aks-spin-operator \

--resource-group rg-spin-operator \

--location germanywestcentral \

--node-count 1 \

--tier free \

--generate-ssh-keys

Once the AKS cluster has been provisioned, use the aks get-credentials command to download

credentials for kubectl:

# Download credentials for kubectl

az aks get-credentials --name aks-spin-operator \

--resource-group rg-spin-operator

For verification, you can use kubectl to browse common resources inside of the AKS cluster:

# Browse namespaces in the AKS cluster

kubectl get namespaces

NAME STATUS AGE

default Active 3m

kube-node-lease Active 3m

kube-public Active 3m

kube-system Active 3m

Deploying the Spin Operator

First, the Custom Resource Definition (CRD)

and the Runtime Class for wasmtime-spin-v2 must be

installed.

# Install the CRDs

kubectl apply -f https://github.com/spinframework/spin-operator/releases/download/v0.6.1/spin-operator.crds.yaml

# Install the Runtime Class

kubectl apply -f https://github.com/spinframework/spin-operator/releases/download/v0.6.1/spin-operator.runtime-class.yaml

The following installs cert-manager which is

required to automatically provision and manage TLS certificates (used by the admission webhook

system of Spin Operator)

# Install cert-manager CRDs

kubectl apply -f https://github.com/cert-manager/cert-manager/releases/download/v1.14.3/cert-manager.crds.yaml

# Add and update Jetstack repository

helm repo add jetstack https://charts.jetstack.io

helm repo update

# Install the cert-manager Helm chart

helm install cert-manager jetstack/cert-manager \

--namespace cert-manager \

--create-namespace \

--version v1.14.3

The Spin Operator chart also has a dependency on Kwasm, which you use to

install containerd-wasm-shim on the Kubernetes node(s):

# Add Helm repository if not already done

helm repo add kwasm http://kwasm.sh/kwasm-operator/

helm repo update

# Install KWasm operator

helm install \

kwasm-operator kwasm/kwasm-operator \

--namespace kwasm \

--create-namespace \

--set kwasmOperator.installerImage=ghcr.io/spinframework/containerd-shim-spin/node-installer:v0.22.0

# Provision Nodes

kubectl annotate node --all kwasm.sh/kwasm-node=true

To verify containerd-wasm-shim installation, you can inspect the logs from the Kwasm Operator:

# Inspect logs from the Kwasm Operator

kubectl logs -n kwasm -l app.kubernetes.io/name=kwasm-operator

{"level":"info","node":"aks-nodepool1-31687461-vmss000000","time":"2024-02-12T11:23:43Z","message":"Trying to Deploy on aks-nodepool1-31687461-vmss000000"}

{"level":"info","time":"2024-02-12T11:23:43Z","message":"Job aks-nodepool1-31687461-vmss000000-provision-kwasm is still Ongoing"}

{"level":"info","time":"2024-02-12T11:24:00Z","message":"Job aks-nodepool1-31687461-vmss000000-provision-kwasm is Completed. Happy WASMing"}

The following installs the chart with the release name spin-operator in the spin-operator

namespace:

helm install spin-operator \

--namespace spin-operator \

--create-namespace \

--version 0.6.1 \

--wait \

oci://ghcr.io/spinframework/charts/spin-operator

Lastly, create the shim executor::

kubectl apply -f https://github.com/spinframework/spin-operator/releases/download/v0.6.1/spin-operator.shim-executor.yaml

Deploying a Spin App to AKS

To validate the Spin Operator deployment, you will deploy a simple Spin App to the AKS cluster. The

following command will install a simple Spin App using the SpinApp CRD you provisioned in the

previous section:

# Deploy a sample Spin app

kubectl apply -f https://raw.githubusercontent.com/spinframework/spin-operator/main/config/samples/simple.yaml

Verifying the Spin App

Configure port forwarding from port 8080 of your local machine to port 80 of the Kubernetes

service which points to the Spin App you installed in the previous section:

kubectl port-forward services/simple-spinapp 8080:80

Forwarding from 127.0.0.1:8080 -> 80

Forwarding from [::1]:8080 -> 80

Send a HTTP request to http://127.0.0.1:8080/hello using

curl:

# Send an HTTP GET request to the Spin App

curl -iX GET http://localhost:8080/hello

HTTP/1.1 200 OK

transfer-encoding: chunked

date: Mon, 12 Feb 2024 12:23:52 GMT

Hello world from Spin!%

Removing the Azure infrastructure

To delete the Azure infrastructure created as part of this article, use the following command:

# Remove all Azure resources

az group delete --name rg-spin-operator \

--no-wait \

--yes

2.5 - Installing on Linode Kubernetes Engine (LKE)

This guide walks you through the process of installing SpinKube on

LKE.

This guide walks through the process of installing and configuring SpinKube on Linode Kubernetes

Engine (LKE).

Prerequisites

This guide assumes that you have an Akamai Linode account that is

configured and has sufficient permissions for creating a new LKE cluster.

You will also need recent versions of kubectl and helm installed on your system.

Creating an LKE Cluster

LKE has a managed control plane, so you only need to create the pool of worker nodes. In this

tutorial, we will create a 2-node LKE cluster using the smallest available worker nodes. This should

be fine for installing SpinKube and running up to around 100 Spin apps.

You may prefer to run a larger cluster if you plan on mixing containers and Spin apps, because

containers consume substantially more resources than Spin apps do.

In the Linode web console, click on Kubernetes in the right-hand navigation, and then click

Create Cluster.

You will only need to make a few choices on this screen. Here’s what we have done:

- Cluster name: We named the cluster

spinkube-lke-1. You should name it according to whatever

convention you prefer. - Region: We chose the

Chicago, IL (us-ord) region, but you can choose any region you prefer. - Kubernetes Version: The latest supported Kubernetes version is

1.30, so we chose that. - High Availability Control Plane: For this testing cluster, we chose

No on HA Control Plane

because we do not need high availability. - Node Pool configuration: In

Add Node Pools, we added two Dedicated 4 GB simply to show a

cluster running more than one node. Two nodes is sufficient for Spin apps, though you may prefer

the more traditional 3 node cluster. Click Add to add these, and ignore the warning about

minimum sizes.

Once you have set things to your liking, click Create Cluster.

This will take you to a screen that shows the status of the cluster. Initially, you will want to

wait for all of your Node Pool to start up. Once all of the nodes are online, download the

kubeconfig file, which will be named something like spinkube-lke-1-kubeconfig.yaml.

The kubeconfig file will have the credentials for connecting to your new LKE cluster. Do not

share that file or put it in a public place.

For all of the subsequent operations, you will want to use the spinkube-lke-1-kubeconfig.yaml as

your main Kubernetes configuration file. The best way to do that is to set the environment variable

KUBECONFIG to point to that file:

$ export KUBECONFIG=/path/to/spinkube-lke-1-kubeconfig.yaml

You can test this using the command kubectl config view:

$ kubectl config view

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: DATA+OMITTED

server: https://REDACTED.us-ord-1.linodelke.net:443

name: lke203785

contexts:

- context:

cluster: lke203785

namespace: default

user: lke203785-admin

name: lke203785-ctx

current-context: lke203785-ctx

kind: Config

preferences: {}

users:

- name: lke203785-admin

user:

token: REDACTED

This shows us our cluster config. You should be able to cross-reference the lkeNNNNNN version with

what you see on your Akamai Linode dashboard.

Install SpinKube Using Helm

At this point, install SpinKube with Helm. As long as your KUBECONFIG

environment variable is pointed at the correct cluster, the installation method documented there

will work.

Once you are done following the installation steps, return here to install a first app.

Creating a First App

We will use the spin kube plugin to scaffold out a new app. If you run the following command and

the kube plugin is not installed, you will first be prompted to install the plugin. Choose yes

to install.

We’ll point to an existing Spin app, a Hello World program written in

Rust, compiled to Wasm, and stored in

GitHub Container Registry (GHCR):

$ spin kube scaffold --from ghcr.io/spinkube/containerd-shim-spin/examples/spin-rust-hello:v0.13.0 > hello-world.yaml

Note that Spin apps, which are WebAssembly, can be stored in most container

registries even though they are not

Docker containers.

This will write the following to hello-world.yaml:

apiVersion: core.spinkube.dev/v1alpha1

kind: SpinApp

metadata:

name: spin-rust-hello

spec:

image: "ghcr.io/spinkube/containerd-shim-spin/examples/spin-rust-hello:v0.13.0"

executor: containerd-shim-spin

replicas: 2

Using kubectl apply, we can deploy that app:

$ kubectl apply -f hello-world.yaml

spinapp.core.spinkube.dev/spin-rust-hello created

With SpinKube, SpinApps will be deployed as Pod resources, so we can see the app using kubectl get pods:

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

spin-rust-hello-f6d8fc894-7pq7k 1/1 Running 0 54s

spin-rust-hello-f6d8fc894-vmsgh 1/1 Running 0 54s

Status is listed as Running, which means our app is ready.

Making An App Public with a NodeBalancer

By default, Spin apps will be deployed with an internal service. But with Linode, you can provision

a NodeBalancer using a Service

object. Here is a hello-world-service.yaml that provisions a nodebalancer for us:

apiVersion: v1

kind: Service

metadata:

name: spin-rust-hello-nodebalancer

annotations:

service.beta.kubernetes.io/linode-loadbalancer-throttle: "4"

labels:

core.spinkube.dev/app-name: spin-rust-hello

spec:

type: LoadBalancer

ports:

- name: http

port: 80

protocol: TCP

targetPort: 80

selector:

core.spinkube.dev/app.spin-rust-hello.status: ready

sessionAffinity: None

When LKE receives a Service whose type is LoadBalancer, it will provision a NodeBalancer for

you.

You can customize this for your app simply by replacing all instances of spin-rust-hello with

the name of your app.

We can create the NodeBalancer by running kubectl apply on the above file:

$ kubectl apply -f hello-world-nodebalancer.yaml

service/spin-rust-hello-nodebalancer created

Provisioning the new NodeBalancer may take a few moments, but we can get the IP address using

kubectl get service spin-rust-hello-nodebalancer:

$ get service spin-rust-hello-nodebalancer

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

spin-rust-hello-nodebalancer LoadBalancer 10.128.235.253 172.234.210.123 80:31083/TCP 40s

The EXTERNAL-IP field tells us what the NodeBalancer is using as a public IP. We can now test this

out over the Internet using curl or by entering the URL http://172.234.210.123/hello into your

browser.

$ curl 172.234.210.123/hello

Hello world from Spin!

Deleting Our App

To delete this sample app, we will first delete the NodeBalancer, and then delete the app:

$ kubectl delete service spin-rust-hello-nodebalancer

service "spin-rust-hello-nodebalancer" deleted

$ kubectl delete spinapp spin-rust-hello

spinapp.core.spinkube.dev "spin-rust-hello" deleted

If you delete the NodeBalancer out of the Linode console, it will not automatically delete the

Service record in Kubernetes, which will cause inconsistencies. So it is best to use kubectl delete service to delete your NodeBalancer.

If you are also done with your LKE cluster, the easiest way to delete it is to log into the Akamai

Linode dashboard, navigate to Kubernetes, and press the Delete button. This will destroy all of

your worker nodes and deprovision the control plane.

2.6 - Installing on Rancher Desktop

This tutorial shows how to integrate SpinKube and Rancher Desktop.

Rancher Desktop is an open-source application that provides all the

essentials to work with containers and Kubernetes on your desktop.

Prerequisites

- An operating system compatible with Rancher Desktop (Windows, macOS, or Linux).

- Administrative or superuser access on your computer.

Step 1: Installing Rancher Desktop

- Download Rancher Desktop:

- Navigate to the Rancher Desktop releases

page. We tested this documentation with Rancher Desktop

1.19.1 with Spin v3.2.0, but it should work with more recent versions. - Select the appropriate installer for your operating system.

- Install Rancher Desktop:

- Run the downloaded installer and follow the on-screen instructions to complete the

installation.

- Open Rancher Desktop

- Configure

containerd as your container runtime engine (under Preferences -> Container Engine). - Make sure that the Enable Wasm option is checked in the Preferences → Container Engine

section. Remember to always apply your changes.

- Navigate to the Preferences -> Kubernetes menu.

- Enable Kubernetes, Traefik and Spin Operator (under Preferences -> Kubernetes ensure that the Enable Kubernetes, Enable Traefik and Install Spin Operator options are checked.)

Make sure to select rancher-desktop from your Kubernetes contexts: kubectl config use-context rancher-desktop

Once your changes have been applied, go to the Cluster Dashboard -> Workloads. You should see the spin-operator-controller-manager deployed to the spin-operator namespace.

Step 3: Creating a Spin Application

- Open a terminal (Command Prompt, Terminal, or equivalent based on your OS).

- Create a new Spin application: This command creates a new Spin application using the

http-js template, named hello-k3s.

$ spin new -t http-js hello-k3s --accept-defaults

$ cd hello-k3s

- We can edit the

/src/index.js file and make the workload return a string “Hello from Rancher Desktop”:

import { AutoRouter } from 'itty-router';

let router = AutoRouter();

router

.get("/", () => new Response("Hello from Rancher Desktop")) // <-- this changed

.get('/hello/:name', ({ name }) => `Hello, ${name}!`)

addEventListener('fetch', (event) => {

event.respondWith(router.fetch(event.request));

});

Step 4: Deploying Your Application

- Push the application to a registry:

$ npm install

$ spin build

$ spin registry push ttl.sh/hello-k3s:0.1.0

Replace ttl.sh/hello-k3s:0.1.0 with your registry URL and tag.

- Deploy your Spin application to the cluster:

Use the spin-kube plugin to deploy your application to the cluster

spin kube scaffold --from ttl.sh/hello-k3s:0.1.0 | kubectl apply -f -

If we click on the Rancher Desktop’s “Cluster Dashboard”, we can see hello-k3s is running inside

the “Workloads” dropdown section:

To access our app outside of the cluster, we can forward the port so that we access the application

from our host machine:

$ kubectl port-forward svc/hello-k3s 8083:80

To test locally, we can make a request as follows:

$ curl localhost:8083

Hello from Rancher Desktop

The above curl command or a quick visit to your browser at localhost:8083 will return the “Hello

from Rancher Desktop” message:

2.7 - Installing on Microk8s

This guide walks you through the process of installing SpinKube using

Microk8s.

This guide walks through the process of installing and configuring Microk8s and SpinKube.

Prerequisites

This guide assumes you are running Ubuntu 24.04, and that you have Snap enabled (which is the

default).

The testing platform for this installation was an Akamai Edge Linode running 4G of memory and 2

cores.

Installing Spin

You will need to install Spin. The easiest way is

to just use the following one-liner to get the latest version of Spin:

$ curl -fsSL https://developer.fermyon.com/downloads/install.sh | bash

Typically you will then want to move spin to /usr/local/bin or somewhere else on your $PATH:

$ sudo mv spin /usr/local/bin/spin

You can test that it’s on your $PATH with which spin. If this returns blank, you will need to

adjust your $PATH variable or put Spin somewhere that is already on $PATH.

A Script To Do This

If you would rather work with a shell script, you may find this

Gist

a great place to start. It installs Microk8s and SpinKube, and configures both.

Installing Microk8s on Ubuntu

Use snap to install microk8s:

$ sudo snap install microk8s --classic

This will install Microk8s and start it. You may want to read the official installation

instructions before proceeding. Wait for a moment or two,

and then ensure Microk8s is running with the microk8s status command.

Next, enable the TLS certificate manager:

$ microk8s enable cert-manager

Now we’re ready to install the SpinKube environment for running Spin applications.

Installing SpinKube

SpinKube provides the entire toolkit for running Spin serverless apps. You may want to familiarize

yourself with the SpinKube quickstart guide

before proceeding.

First, we need to apply a runtime class and a CRD for SpinKube:

$ microk8s kubectl apply -f https://github.com/spinframework/spin-operator/releases/download/v0.6.1/spin-operator.runtime-class.yaml

$ microk8s kubectl apply -f https://github.com/spinframework/spin-operator/releases/download/v0.6.1/spin-operator.crds.yaml

Both of these should apply immediately.

We then need to install KWasm because it is not yet included with Microk8s:

$ microk8s helm repo add kwasm http://kwasm.sh/kwasm-operator/

$ microk8s helm install kwasm-operator kwasm/kwasm-operator --namespace kwasm --create-namespace --set kwasmOperator.installerImage=ghcr.io/spinframework/containerd-shim-spin/node-installer:v0.22.0

$ microk8s kubectl annotate node --all kwasm.sh/kwasm-node=true

The last line above tells Microk8s that all nodes on the cluster (which is just one node in this

case) can run Spin applications.

Next, we need to install SpinKube’s operator using Helm (which is included with Microk8s).

$ microk8s helm install spin-operator --namespace spin-operator --create-namespace --version 0.6.1 --wait oci://ghcr.io/spinframework/charts/spin-operator

Now we have the main operator installed. There is just one more step. We need to install the shim

executor, which is a special CRD that allows us to use multiple executors for WebAssembly.

$ microk8s kubectl apply -f https://github.com/spinframework/spin-operator/releases/download/v0.6.1/spin-operator.shim-executor.yaml

Now SpinKube is installed!

Running an App in SpinKube

Next, we can run a simple Spin application inside of Microk8s.

While we could write regular deployments or pod specifications, the easiest way to deploy a Spin app

is by creating a simple SpinApp resource. Let’s use the simple example from SpinKube:

$ microk8s kubectl apply -f https://raw.githubusercontent.com/spinframework/spin-operator/main/config/samples/simple.yaml

The above installs a simple SpinApp YAML that looks like this:

apiVersion: core.spinkube.dev/v1alpha1

kind: SpinApp

metadata:

name: simple-spinapp

spec:

image: "ghcr.io/spinkube/containerd-shim-spin/examples/spin-rust-hello:v0.13.0"

replicas: 1

executor: containerd-shim-spin

You can read up on the definition in the

documentation.

It may take a moment or two to get started, but you should be able to see the app with microk8s kubectl get pods.

$ microk8s kubectl get po

NAME READY STATUS RESTARTS AGE

simple-spinapp-5c7b66f576-9v9fd 1/1 Running 0 45m

Troubleshooting

If STATUS gets stuck in ContainerCreating, it is possible that KWasm did not install correctly.

Try doing a microk8s stop, waiting a few minutes, and then running microk8s start. You can also

try the command:

$ microk8s kubectl logs -n kwasm -l app.kubernetes.io/name=kwasm-operator

Testing the Spin App

The easiest way to test our Spin app is to port forward from the Spin app to the outside host:

$ microk8s kubectl port-forward services/simple-spinapp 8080:80

You can then run curl localhost:8080/hello

$ curl localhost:8080/hello

Hello world from Spin!

Where to go from here

So far, we installed Microk8s, SpinKube, and a single Spin app. To have a more production-ready

version, you might want to:

Bonus: Configuring Microk8s ingress

Microk8s includes an NGINX-based ingress controller that works great with Spin applications.

Enable the ingress controller: microk8s enable ingress

Now we can create an ingress that routes our traffic to the simple-spinapp app. Create the file

ingress.yaml with the following content. Note that the service.name is

the name of our Spin app.

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: http-ingress

spec:

rules:

- http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: simple-spinapp

port:

number: 80

Install the above with microk8s kubectl -f ingress.yaml. After a moment or two, you should be able

to run curl [localhost](http://localhost) and see Hello World!.

Conclusion

In this guide we’ve installed Spin, Microk8s, and SpinKube and then run a Spin application.

To learn more about the many things you can do with Spin apps, go to the Spin developer

docs. You can also look at a variety of examples at Spin Up

Hub.

Or to try out different Kubernetes configurations, check out other installation

guides.

2.8 - Executor Compatibility Matrices

A set of compatibility matrices for each SpinKube executor

containerd-shim-spin Executor

The Spin containerd shim project is a containerd shim implementation for Spin.

Spin Operator and Shim Feature Map

If a feature is configured in a SpinApp that is not supported in the version of the shim being

used, the application may not execute as expected. The following maps out the versions of the Spin

containerd shim, Spin Operator, and spin kube

plugin that have support for specific features.

| Feature | SpinApp Field | Shim Version | Spin Operator Version | spin kube Plugin Version |

|---|

| OTEL Traces | otel | v0.15.0 | v0.3.0 | NA |

| Selective Deployment | components | v0.17.0 | v0.4.0 | v0.3.0 |

| Invocation Limits | invocationLimits | v0.20.0 | v0.6.0 | NA |

NA indicates that the feature in not available yet in that project

Spin and Spin Containerd Shim Version Map

For tracking the availability of Spin features and compatibility of Spin SDKs, the following

indicates which versions of the Spin runtime the Spin containerd

shim uses.

3 - Using SpinKube

Introductions to all the key parts of SpinKube you’ll need to know.

3.1 - Selective Deployments in Spin

Learn how to deploy a subset of components from your SpinApp using Selective Deployments.

This article explains how to selectively deploy a subset of components from your Spin App using Selective Deployments. You will learn how to:

- Scaffold a Specific Component from a Spin Application into a Custom Resource

- Run a Selective Deployment

Selective Deployments allow you to control which components within a Spin app are active for a specific instance of the app. With Component Selectors, Spin and SpinKube can declare at runtime which components should be activated, letting you deploy a single, versioned artifact while choosing which parts to enable at startup. This approach separates developer goals (building a well-architected app) from operational needs (optimizing for specific infrastructure).

Prerequisites

For this tutorial, you’ll need:

Scaffold a Specific Component from a Spin Application into a Custom Resource

We’ll use a sample application called “Salutations”, which demonstrates greetings via two components, each responding to a unique HTTP route. If we take a look at the application manifest, we’ll see that this Spin application is comprised of two components:

Hello component triggered by the /hi routeGoodbye component triggered by the /bye route

spin_manifest_version = 2

[application]

name = "salutations"

version = "0.1.0"

authors = ["Kate Goldenring <kate.goldenring@fermyon.com>"]

description = "An app that gives salutations"

[[trigger.http]]

route = "/hi"

component = "hello"

[component.hello]

source = "../hello-world/main.wasm"

allowed_outbound_hosts = []

[component.hello.build]

command = "cd ../hello-world && tinygo build -target=wasi -gc=leaking -no-debug -o main.wasm main.go"

watch = ["**/*.go", "go.mod"]

[[trigger.http]]

route = "/bye"

component = "goodbye"

[component.goodbye]

source = "main.wasm"

allowed_outbound_hosts = []

[component.goodbye.build]

command = "tinygo build -target=wasi -gc=leaking -no-debug -o main.wasm main.go"

watch = ["**/*.go", "go.mod"]

With Selective Deployments, you can choose to deploy only specific components without modifying the source code. For this example, we’ll deploy just the hello component.

Note that if you had an Spin application with more than two components, you could choose to deploy multiple components selectively.

To Selectively Deploy, we first need to turn our application into a SpinApp Custom Resource with the spin kube scaffold command, using the optional --component field to specify which component we’d like to deploy:

spin kube scaffold --from ghcr.io/spinkube/spin-operator/salutations:20241105-223428-g4da3171 --component hello --replicas 1 --out spinapp.yaml

Now if we take a look at our spinapp.yaml, we should see that only the hello component will be deployed via Selective Deployments:

apiVersion: core.spinkube.dev/v1alpha1

kind: SpinApp

metadata:

name: salutations

spec:

image: "ghcr.io/spinkube/spin-operator/salutations:20241105-223428-g4da3171"

executor: containerd-shim-spin

replicas: 1

components:

- hello

Run a Selective Deployment

Now you can deploy your app using kubectl as you normally would:

# Deploy the spinapp.yaml using kubectl

kubectl apply -f spinapp.yaml

spinapp.core.spinkube.dev/salutations created

We can test that only our hello component is running by port-forwarding its service.

kubectl port-forward svc/salutations 8083:80

Now let’s call the /hi route in a seperate terminal:

If the hello component is running correctly, we should see a response of “Hello Fermyon!”:

Next, let’s try the /bye route. This should return nothing, confirming that only the hello component was deployed:

There you have it! You selectively deployed a subset of your Spin application to SpinKube with no modifications to your source code. This approach lets you easily deploy only the components you need, which can improve efficiency in environments where only specific services are required.

3.2 - Packaging and deploying apps

Learn how to package and distribute Spin Apps using either public or private OCI compliant registries.

This article explains how Spin Apps are packaged and distributed via both public and private

registries. You will learn how to:

- Package and distribute Spin Apps

- Deploy Spin Apps

- Scaffold Kubernetes Manifests for Spin Apps

- Use private registries that require authentication

Prerequisites

For this tutorial in particular, you need

Creating a new Spin App

You use the spin CLI, to create a new Spin App. The spin CLI provides different templates, which

you can use to quickly create different kinds of Spin Apps. For demonstration purposes, you will use

the http-go template to create a simple Spin App.

# Create a new Spin App using the http-go template

spin new --accept-defaults -t http-go hello-spin

# Navigate into the hello-spin directory

cd hello-spin

The spin CLI created all necessary files within hello-spin. Besides the Spin Manifest

(spin.toml), you can find the actual implementation of the app in main.go:

package main

import (

"fmt"

"net/http"

spinhttp "github.com/fermyon/spin/sdk/go/v2/http"

)

func init() {

spinhttp.Handle(func(w http.ResponseWriter, r *http.Request) {

w.Header().Set("Content-Type", "text/plain")

fmt.Fprintln(w, "Hello Fermyon!")

})

}

func main() {}

This implementation will respond to any incoming HTTP request, and return an HTTP response with a

status code of 200 (Ok) and send Hello Fermyon as the response body.

You can test the app on your local machine by invoking the spin up command from within the

hello-spin folder.

Packaging and Distributing Spin Apps

Spin Apps are packaged and distributed as OCI artifacts. By leveraging OCI artifacts, Spin Apps can

be distributed using any registry that implements the Open Container Initiative Distribution

Specification (a.k.a. “OCI Distribution

Spec”).

The spin CLI simplifies packaging and distribution of Spin Apps and provides an atomic command for

this (spin registry push). You can package and distribute the hello-spin app that you created as

part of the previous section like this:

# Package and Distribute the hello-spin app

spin registry push --build ttl.sh/hello-spin:24h

It is a good practice to add the --build flag to spin registry push. It prevents you from

accidentally pushing an outdated version of your Spin App to your registry of choice.

Deploying Spin Apps

To deploy Spin Apps to a Kubernetes cluster which has Spin Operator running, you use the kube

plugin for spin. Use the spin kube deploy command as shown here to deploy the hello-spin app

to your Kubernetes cluster:

# Deploy the hello-spin app to your Kubernetes Cluster

spin kube deploy --from ttl.sh/hello-spin:24h

spinapp.core.spinkube.dev/hello-spin created

You can deploy a subset of components in your Spin Application using Selective Deployments.

Scaffolding Spin Apps

In the previous section, you deployed the hello-spin app using the spin kube deploy command.

Although this is handy, you may want to inspect, or alter the Kubernetes manifests before applying

them. You use the spin kube scaffold command to generate Kubernetes manifests:

spin kube scaffold --from ttl.sh/hello-spin:24h

apiVersion: core.spinkube.dev/v1alpha1

kind: SpinApp

metadata:

name: hello-spin

spec:

image: "ttl.sh/hello-spin:24h"

replicas: 2

By default, the command will print all Kubernetes manifests to STDOUT. Alternatively, you can

specify the out argument to store the manifests to a file:

# Scaffold manifests to spinapp.yaml

spin kube scaffold --from ttl.sh/hello-spin:24h \

--out spinapp.yaml

# Print contents of spinapp.yaml

cat spinapp.yaml

apiVersion: core.spinkube.dev/v1alpha1

kind: SpinApp

metadata:

name: hello-spin

spec:

image: "ttl.sh/hello-spin:24h"

replicas: 2

You can then deploy the Spin App by applying the manifest with the kubectl CLI:

kubectl apply -f spinapp.yaml

Distributing and Deploying Spin Apps via private registries

It is quite common to distribute Spin Apps through private registries that require some sort of

authentication. To publish a Spin App to a private registry, you have to authenticate using the

spin registry login command.

For demonstration purposes, you will now distribute the Spin App via GitHub Container Registry

(GHCR). You can follow this guide by

GitHub

to create a new personal access token (PAT), which is required for authentication.

# Store PAT and GitHub username as environment variables

export GH_PAT=YOUR_TOKEN

export GH_USER=YOUR_GITHUB_USERNAME

# Authenticate spin CLI with GHCR

echo $GH_PAT | spin registry login ghcr.io -u $GH_USER --password-stdin

Successfully logged in as YOUR_GITHUB_USERNAME to registry ghcr.io

Once authentication succeeded, you can use spin registry push to push your Spin App to GHCR:

# Push hello-spin to GHCR

spin registry push --build ghcr.io/$GH_USER/hello-spin:0.0.1

Pushing app to the Registry...

Pushed with digest sha256:1611d51b296574f74b99df1391e2dc65f210e9ea695fbbce34d770ecfcfba581

In Kubernetes you store authentication information as secret of type docker-registry. The

following snippet shows how to create such a secret with kubectl leveraging the environment

variables, you specified in the previous section:

# Create Secret in Kubernetes

kubectl create secret docker-registry ghcr \

--docker-server ghcr.io \

--docker-username $GH_USER \

--docker-password $CR_PAT

secret/ghcr created

Scaffold the necessary SpinApp Custom Resource (CR) using spin kube scaffold:

# Scaffold the SpinApp manifest

spin kube scaffold --from ghcr.io/$GH_USER/hello-spin:0.0.1 \

--out spinapp.yaml

Before deploying the manifest with kubectl, update spinapp.yaml and link the ghcr secret you

previously created using the imagePullSecrets property. Your SpinApp manifest should look like

this:

apiVersion: core.spinkube.dev/v1alpha1

kind: SpinApp

metadata:

name: hello-spin

spec:

image: ghcr.io/$GH_USER/hello-spin:0.0.1

imagePullSecrets:

- name: ghcr

replicas: 2

executor: containerd-shim-spin

$GH_USER should match the actual username provided while running through the previous sections

of this article

Finally, you can deploy the app using kubectl apply:

# Deploy the spinapp.yaml using kubectl

kubectl apply -f spinapp.yaml

spinapp.core.spinkube.dev/hello-spin created

3.3 - Making HTTPS Requests

Configure Spin Apps to allow HTTPS requests.

To enable HTTPS requests, the executor must be configured to use certificates. SpinKube can be configured to use either default or custom certificates.

If you make a request without properly configured certificates, you’ll encounter an error message that reads: error trying to connect: unexpected EOF (unable to get local issuer certificate).

Using default certificates

SpinKube can generate a default CA certificate bundle by setting installDefaultCACerts to true. This creates a secret named spin-ca populated with curl’s default bundle. You can specify a custom secret name by setting caCertSecret.

apiVersion: core.spinkube.dev/v1alpha1

kind: SpinAppExecutor

metadata:

name: containerd-shim-spin

spec:

createDeployment: true

deploymentConfig:

runtimeClassName: wasmtime-spin-v2

installDefaultCACerts: true

Apply the executor using kubectl:

kubectl apply -f myexecutor.yaml

Using custom certificates

Create a secret from your certificate file:

kubectl create secret generic my-custom-ca --from-file=ca-certificates.crt

Configure the executor to use the custom certificate secret:

apiVersion: core.spinkube.dev/v1alpha1

kind: SpinAppExecutor

metadata:

name: containerd-shim-spin

spec:

createDeployment: true

deploymentConfig:

runtimeClassName: wasmtime-spin-v2

caCertSecret: my-custom-ca

Apply the executor using kubectl:

kubectl apply -f myexecutor.yaml

3.4 - Assigning variables

Configure Spin Apps using values from Kubernetes ConfigMaps and Secrets.

By using variables, you can alter application behavior without recompiling your SpinApp. When

running in Kubernetes, you can either provide constant values for variables, or reference them from

Kubernetes primitives such as ConfigMaps and Secrets. This tutorial guides your through the

process of assigning variables to your SpinApp.

Note: If you’d like to learn how to configure your application with an external variable provider

like Vault or Azure Key

Vault, see the External Variable Provider

guide

Build and Store SpinApp in an OCI Registry

We’re going to build the SpinApp and store it inside of a ttl.sh registry. Move

into the

apps/variable-explorer

directory and build the SpinApp we’ve provided:

# Build and publish the sample app

cd apps/variable-explorer

spin build

spin registry push ttl.sh/variable-explorer:1h

Note that the tag at the end of ttl.sh/variable-explorer:1h

indicates how long the image will last e.g. 1h (1 hour). The maximum is 24h and you will need to

repush if ttl exceeds 24 hours.

For demonstration purposes, we use the variable

explorer sample app. It

reads three different variables (log_level, platform_name and db_password) and prints their

values to the STDOUT stream as shown in the following snippet:

let log_level = variables::get("log_level")?;

let platform_name = variables::get("platform_name")?;

let db_password = variables::get("db_password")?;

println!("# Log Level: {}", log_level);

println!("# Platform name: {}", platform_name);

println!("# DB Password: {}", db_password);

Those variables are defined as part of the Spin manifest (spin.toml), and access to them is

granted to the variable-explorer component:

[variables]

log_level = { default = "WARN" }

platform_name = { default = "Fermyon Cloud" }

db_password = { required = true }

[component.variable-explorer.variables]

log_level = "{{ log_level }}"

platform_name = "{{ platform_name }}"

db_password = "{{ db_password }}"

For further reading on defining variables in the Spin manifest, see the Spin Application Manifest

Reference.

Configuration data in Kubernetes

In Kubernetes, you use ConfigMaps for storing non-sensitive, and Secrets for storing sensitive

configuration data. The deployment manifest (config/samples/variable-explorer.yaml) contains

specifications for both a ConfigMap and a Secret:

kind: ConfigMap

apiVersion: v1

metadata:

name: spinapp-cfg

data:

logLevel: INFO

---

kind: Secret

apiVersion: v1

metadata:

name: spinapp-secret

data:

password: c2VjcmV0X3NhdWNlCg==

Assigning variables to a SpinApp

When creating a SpinApp, you can choose from different approaches for specifying variables:

- Providing constant values

- Loading configuration values from ConfigMaps

- Loading configuration values from Secrets

The SpinApp specification contains the variables array, that you use for specifying variables

(See kubectl explain spinapp.spec.variables).

The deployment manifest (config/samples/variable-explorer.yaml) specifies a static value for

platform_name. The value of log_level is read from the ConfigMap called spinapp-cfg, and the

db_password is read from the Secret called spinapp-secret:

kind: SpinApp

apiVersion: core.spinkube.dev/v1alpha1

metadata:

name: variable-explorer

spec:

replicas: 1

image: ttl.sh/variable-explorer:1h

executor: containerd-shim-spin

variables:

- name: platform_name

value: Kubernetes

- name: log_level

valueFrom:

configMapKeyRef:

name: spinapp-cfg

key: logLevel

optional: true

- name: db_password

valueFrom:

secretKeyRef:

name: spinapp-secret

key: password

optional: false

As the deployment manifest outlines, you can use the optional property - as you would do when

specifying environment variables for a regular Kubernetes Pod - to control if Kubernetes should

prevent starting the SpinApp, if the referenced configuration source does not exist.

You can deploy all resources by executing the following command:

kubectl apply -f config/samples/variable-explorer.yaml

configmap/spinapp-cfg created

secret/spinapp-secret created

spinapp.core.spinkube.dev/variable-explorer created

Inspecting runtime logs of your SpinApp

To verify that all variables are passed correctly to the SpinApp, you can configure port forwarding

from your local machine to the corresponding Kubernetes Service:

kubectl port-forward services/variable-explorer 8080:80

Forwarding from 127.0.0.1:8080 -> 80

Forwarding from [::1]:8080 -> 80

When port forwarding is established, you can send an HTTP request to the variable-explorer from

within an additional terminal session:

curl http://localhost:8080

Hello from Kubernetes

Finally, you can use kubectl logs to see all logs produced by the variable-explorer at runtime:

kubectl logs -l core.spinkube.dev/app-name=variable-explorer

# Log Level: INFO

# Platform Name: Kubernetes

# DB Password: secret_sauce

3.5 - External Variable Providers

Configure external variable providers for your Spin App.

In the Assigning Variables guide, you learned how to configure variables

on the SpinApp via its variables section,

either by supplying values in-line or via a Kubernetes ConfigMap or Secret.

You can also utilize an external service like Vault or Azure Key

Vault to provide variable values for your

application. This guide will show you how to use and configure both services in tandem with

corresponding sample applications.

Prerequisites

To follow along with this tutorial, you’ll need:

Supported providers

Spin currently supports Vault and Azure Key Vault as

external variable providers. Configuration is supplied to the application via a Runtime

Configuration

file.

In SpinKube, this configuration file can be supplied in the form of a Kubernetes secret and linked

to a SpinApp via its

runtimeConfig.loadFromSecret

section.

Note: loadFromSecret takes precedence over any other runtimeConfig configuration. Thus, all

runtime configuration must be contained in the Kubernetes secret, including

SQLite, Key

Value and

LLM options that might otherwise be

specified via their dedicated specs.

Let’s look at examples utilizing specific provider configuration next.

Vault provider

Vault is a popular choice for storing secrets and serving as a secure

key-value store.

This guide assumes you have:

Build and publish the Spin application

We’ll use the variable explorer

app to test this

integration.

First, clone the repository locally and navigate to the variable-explorer directory:

git clone git@github.com:spinkube/spin-operator.git

cd apps/variable-explorer

Now, build and push the application to a registry you have access to. Here we’ll use

ttl.sh:

spin build

spin registry push ttl.sh/variable-explorer:1h

Create the runtime-config.toml file

Here’s a sample runtime-config.toml file containing Vault provider configuration:

[[config_provider]]

type = "vault"

url = "https://my-vault-server:8200"

token = "my_token"

mount = "admin/secret"

To use this sample, you’ll want to update the url and token fields with values applicable to

your Vault cluster. The mount value will depend on the Vault namespace and kv-v2 secrets engine

name. In this sample, the namespace is admin and the engine is named secret, eg by running

vault secrets enable --path=secret kv-v2.

Create the secrets in Vault

Create the log_level, platform_name and db_password secrets used by the variable-explorer

application in Vault:

vault kv put secret/log_level value=INFO

vault kv put secret/platform_name value=Kubernetes

vault kv put secret/db_password value=secret_sauce

Create the SpinApp and Secret

Next, scaffold the SpinApp and Secret resource (containing the runtime-config.toml data) together

in one go via the kube plugin:

spin kube scaffold -f ttl.sh/variable-explorer:1h -c runtime-config.toml -o scaffold.yaml

Deploy the application

kubectl apply -f scaffold.yaml

Test the application

You are now ready to test the application and verify that all variables are passed correctly to the

SpinApp from the Vault provider.

Configure port forwarding from your local machine to the corresponding Kubernetes Service:

kubectl port-forward services/variable-explorer 8080:80

Forwarding from 127.0.0.1:8080 -> 80

Forwarding from [::1]:8080 -> 80

When port forwarding is established, you can send an HTTP request to the variable-explorer from

within an additional terminal session:

curl http://localhost:8080

Hello from Kubernetes

Finally, you can use kubectl logs to see all logs produced by the variable-explorer at runtime:

kubectl logs -l core.spinkube.dev/app-name=variable-explorer

# Log Level: INFO

# Platform Name: Kubernetes

# DB Password: secret_sauce

Azure Key Vault provider

Azure Key Vault is a secure secret store for

distributed applications hosted on the Azure platform.

This guide assumes you have:

Build and publish the Spin application

We’ll use the Azure Key Vault

Provider

sample application for this exercise.

First, clone the repository locally and navigate to the azure-key-vault-provider directory:

git clone git@github.com:fermyon/enterprise-architectures-and-patterns.git

cd enterprise-architectures-and-patterns/application-variable-providers/azure-key-vault-provider

Now, build and push the application to a registry you have access to. Here we’ll use

ttl.sh:

spin build

spin registry push ttl.sh/azure-key-vault-provider:1h

The next steps will guide you in creating and configuring an Azure Key Vault and populating the

runtime configuration file with connection credentials.

Deploy Azure Key Vault

# Variable Definition

KV_NAME=spinkube-keyvault

LOCATION=westus2

RG_NAME=rg-spinkube-keyvault

# Create Azure Resource Group and Azure Key Vault

az group create -n $RG_NAME -l $LOCATION

az keyvault create -n $KV_NAME \

-g $RG_NAME \

-l $LOCATION \

--enable-rbac-authorization true

# Grab the Azure Resource Identifier of the Azure Key Vault instance

KV_SCOPE=$(az keyvault show -n $KV_NAME -g $RG_NAME -otsv --query "id")

Add a Secret to the Azure Key Vault instance

# Grab the ID of the currently signed in user in Azure CLI

CURRENT_USER_ID=$(az ad signed-in-user show -otsv --query "id")

# Make the currently signed in user a Key Vault Secrets Officer

# on the scope of the new Azure Key Vault instance

az role assignment create --assignee $CURRENT_USER_ID \

--role "Key Vault Secrets Officer" \

--scope $KV_SCOPE

# Create a test secret called 'secret` in the Azure Key Vault instance

az keyvault secret set -n secret --vault-name $KV_NAME --value secret_value -o none

Create a Service Principal and Role Assignment for Spin

SP_NAME=sp-spinkube-keyvault

SP=$(az ad sp create-for-rbac -n $SP_NAME -ojson)

CLIENT_ID=$(echo $SP | jq -r '.appId')

CLIENT_SECRET=$(echo $SP | jq -r '.password')

TENANT_ID=$(echo $SP | jq -r '.tenant')

az role assignment create --assignee $CLIENT_ID \

--role "Key Vault Secrets User" \

--scope $KV_SCOPE

Create the runtime-config.toml file

Create a runtime-config.toml file with the following contents, substituting in the values for

KV_NAME, CLIENT_ID, CLIENT_SECRET and TENANT_ID from the previous steps.

[[config_provider]]

type = "azure_key_vault"

vault_url = "https://<$KV_NAME>.vault.azure.net/"

client_id = "<$CLIENT_ID>"

client_secret = "<$CLIENT_SECRET>"

tenant_id = "<$TENANT_ID>"

authority_host = "AzurePublicCloud"

Create the SpinApp and Secret

Scaffold the SpinApp and Secret resource (containing the runtime-config.toml data) together in one

go via the kube plugin:

spin kube scaffold -f ttl.sh/azure-key-vault-provider:1h -c runtime-config.toml -o scaffold.yaml

Deploy the application

kubectl apply -f scaffold.yaml

Test the application

Now you are ready to test the application and verify that the secret resolves its value from Azure

Key Vault.

Configure port forwarding from your local machine to the corresponding Kubernetes Service:

kubectl port-forward services/azure-key-vault-provider 8080:80

Forwarding from 127.0.0.1:8080 -> 80

Forwarding from [::1]:8080 -> 80

When port forwarding is established, you can send an HTTP request to the azure-key-vault-provider

app from within an additional terminal session:

curl http://localhost:8080

Loaded secret from Azure Key Vault: secret_value

3.6 - Connecting to your app

Learn how to connect to your application.

This topic guide shows you how to connect to your application deployed to SpinKube, including how to

use port-forwarding for local development, or Ingress rules for a production setup.

Run the sample application

Let’s deploy a sample application to your Kubernetes cluster. We will use this application

throughout the tutorial to demonstrate how to connect to it.

Refer to the quickstart guide if you haven’t set up a Kubernetes cluster

yet.

kubectl apply -f https://raw.githubusercontent.com/spinkube/spin-operator/main/config/samples/simple.yaml

When SpinKube deploys the application, it creates a Kubernetes Service that exposes the application

to the cluster. You can check the status of the deployment with the following command:

You should see a service named simple-spinapp with a type of ClusterIP. This means that the

service is only accessible from within the cluster.

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

simple-spinapp ClusterIP 10.43.152.184 <none> 80/TCP 1m

We will use this service to connect to your application.

Port forwarding

This option is useful for debugging and development. It allows you to forward a local port to the

service.

Forward port 8083 to the service so that it can be reached from your computer:

kubectl port-forward svc/simple-spinapp 8083:80

You should be able to reach it from your browser at http://localhost:8083:

curl http://localhost:8083

You should see a message like “Hello world from Spin!”.

This is one of the simplest ways to test your application. However, it is not suitable for

production use. The next section will show you how to expose your application to the internet using

an Ingress controller.

Ingress

Ingress exposes HTTP and HTTPS routes from outside the cluster to services within the cluster.

Traffic routing is controlled by rules defined on the Ingress resource.

Here is a simple example where an Ingress sends all its traffic to one Service:

(source: Kubernetes

documentation)

An Ingress may be configured to give applications externally-reachable URLs, load balance traffic,

terminate SSL / TLS, and offer name-based virtual hosting. An Ingress controller is responsible for

fulfilling the Ingress, usually with a load balancer, though it may also configure your edge router

or additional frontends to help handle the traffic.

Prerequisites

You must have an Ingress

controller to satisfy

an Ingress rule. Creating an Ingress rule without a controller has no effect.

Ideally, all Ingress controllers should fit the reference specification. In reality, the various

Ingress controllers operate slightly differently. Make sure you review your Ingress controller’s

documentation to understand the specifics of how it works.

ingress-nginx is a popular Ingress controller,

so we will use it in this tutorial:

helm upgrade --install ingress-nginx ingress-nginx \

--repo https://kubernetes.github.io/ingress-nginx \

--namespace ingress-nginx --create-namespace

Wait for the ingress controller to be ready:

kubectl wait --namespace ingress-nginx \

--for=condition=ready pod \

--selector=app.kubernetes.io/component=controller \

--timeout=120s

Check the Ingress controller’s external IP address

If your Kubernetes cluster is a “real” cluster that supports services of type LoadBalancer, it

will have allocated an external IP address or FQDN to the ingress controller.

Check the IP address or FQDN with the following command:

kubectl get service ingress-nginx-controller --namespace=ingress-nginx

It will be the EXTERNAL-IP field. If that field shows <pending>, this means that your Kubernetes

cluster wasn’t able to provision the load balancer. Generally, this is because it doesn’t support

services of type LoadBalancer.

Once you have the external IP address (or FQDN), set up a DNS record pointing to it. Refer to your

DNS provider’s documentation on how to add a new DNS record to your domain.

You will want to create an A record that points to the external IP address. If your external IP

address is <EXTERNAL-IP>, you would create a record like this:

A myapp.spinkube.local <EXTERNAL-IP>

Once you’ve added a DNS record to your domain and it has propagated, proceed to create an ingress

resource.

Create an Ingress resource

Create an Ingress resource that routes traffic to the simple-spinapp service. The following

example assumes that you have set up a DNS record for myapp.spinkube.local:

kubectl create ingress simple-spinapp --class=nginx --rule="myapp.spinkube.local/*=simple-spinapp:80"

A couple notes about the above command:

simple-spinapp is the name of the Ingress resource.myapp.spinkube.local is the hostname that the Ingress will route traffic to. This is the DNS

record you set up earlier.simple-spinapp:80 is the Service that SpinKube created for us. The application listens for

requests on port 80.

Assuming DNS has propagated correctly, you should see a message like “Hello world from Spin!” when

you connect to http://myapp.spinkube.local/.

Congratulations, you are serving a public website hosted on a Kubernetes cluster! 🎉

Connecting with kubectl port-forward

This is a quick way to test your Ingress setup without setting up DNS records or on clusters without

support for services of type LoadBalancer.

Open a new terminal and forward a port from localhost port 8080 to the Ingress controller:

kubectl port-forward --namespace=ingress-nginx service/ingress-nginx-controller 8080:80

Then, in another terminal, test the Ingress setup:

curl --resolve myapp.spinkube.local:8080:127.0.0.1 http://myapp.spinkube.local:8080/hello

You should see a message like “Hello world from Spin!”.

If you want to see your app running in the browser, update your /etc/hosts file to resolve

requests from myapp.spinkube.local to the ingress controller:

127.0.0.1 myapp.spinkube.local

3.7 - Monitoring your app

How to view telemetry data from your Spin apps running in SpinKube.

This topic guide shows you how to configure SpinKube so your Spin apps export observability data. This data will export to an OpenTelemetry collector which will send it to Jaeger.

Prerequisites

Please ensure you have the following tools installed before continuing:

About OpenTelemetry Collector

From the OpenTelemetry documentation:

The OpenTelemetry Collector offers a vendor-agnostic implementation of how to receive, process and export telemetry data. It removes the need to run, operate, and maintain multiple agents/collectors. This works with improved scalability and supports open source observability data formats (e.g. Jaeger, Prometheus, Fluent Bit, etc.) sending to one or more open source or commercial backends.

In our case, the OpenTelemetry collector serves as a single endpoint to receive and route telemetry data, letting us to monitor metrics, traces, and logs via our preferred UIs.

About Jaeger

From the Jaeger documentation:

Jaeger is a distributed tracing platform released as open source by Uber Technologies. With Jaeger you can: Monitor and troubleshoot distributed workflows, Identify performance bottlenecks, Track down root causes, Analyze service dependencies

Here, we have the OpenTelemetry collector send the trace data to Jaeger.

Deploy OpenTelemetry Collector

First, add the OpenTelemetry collector Helm repository:

helm repo add open-telemetry https://open-telemetry.github.io/opentelemetry-helm-charts

helm repo update

Next, deploy the OpenTelemetry collector to your cluster:

helm upgrade --install otel-collector open-telemetry/opentelemetry-collector \

--set image.repository="otel/opentelemetry-collector-k8s" \

--set nameOverride=otel-collector \

--set mode=deployment \

--set config.exporters.otlp.endpoint=http://jaeger-collector.default.svc.cluster.local:4317 \

--set config.exporters.otlp.tls.insecure=true \

--set config.service.pipelines.traces.exporters\[0\]=otlp \

--set config.service.pipelines.traces.processors\[0\]=batch \

--set config.service.pipelines.traces.receivers\[0\]=otlp \

--set config.service.pipelines.traces.receivers\[1\]=jaeger

Deploy Jaeger

Next, add the Jaeger Helm repository:

helm repo add jaegertracing https://jaegertracing.github.io/helm-charts

helm repo update

Then, deploy Jaeger to your cluster:

helm upgrade --install jaeger jaegertracing/jaeger \

--set provisionDataStore.cassandra=false \

--set allInOne.enabled=true \

--set agent.enabled=false \

--set collector.enabled=false \

--set query.enabled=false \

--set storage.type=memory

The SpinAppExecutor resource determines how Spin applications are deployed in the cluster. The following configuration will ensure that any SpinApp resource using this executor will send telemetry data to the OpenTelemetry collector. To see a comprehensive list of OTel options for the SpinAppExecutor, see the API reference.

Create a file called executor.yaml with the following content:

apiVersion: core.spinkube.dev/v1alpha1

kind: SpinAppExecutor

metadata:

name: otel-shim-executor

spec:

createDeployment: true

deploymentConfig:

runtimeClassName: wasmtime-spin-v2

installDefaultCACerts: true

otel:

exporter_otlp_endpoint: http://otel-collector.default.svc.cluster.local:4318

To deploy the executor, run:

kubectl apply -f executor.yaml

Deploy a Spin app to observe

With everything in place, we can now deploy a SpinApp resource that uses the executor otel-shim-executor.

Create a file called app.yaml with the following content:

apiVersion: core.spinkube.dev/v1alpha1

kind: SpinApp

metadata:

name: otel-spinapp

spec:

image: ghcr.io/spinkube/spin-operator/cpu-load-gen:20240311-163328-g1121986

executor: otel-shim-executor

replicas: 1

Deploy the app by running:

kubectl apply -f app.yaml

Congratulations! You now have a Spin app exporting telemetry data.

Next, we need to generate telemetry data for the Spin app to export. Use the below command to port-forward the Spin app:

kubectl port-forward svc/otel-spinapp 3000:80

In a new terminal window, execute a curl request:

The request will take a couple of moments to run, but once it’s done, you should see an output similar to this:

fib(43) = 433494437

Interact with Jaeger

To view the traces in Jaeger, use the following port-forward command:

kubectl port-forward svc/jaeger-query 16686:16686

Then, open your browser and navigate to localhost:16686 to interact with Jaeger’s UI.

3.8 - Using a key value store

Connect your Spin App to a key value store

Spin applications can utilize a standardized API for persisting data in a key value

store. The default key value store in

Spin is an SQLite database, which is great for quickly utilizing non-relational local storage

without any infrastructure set-up. However, this solution may not be preferable for an app running

in the context of SpinKube, where apps are often scaled beyond just one replica.

Thankfully, Spin supports configuring an application with an external key value

provider.

External providers include Redis or Valkey and Azure

Cosmos DB.

Prerequisites

To follow along with this tutorial, you’ll need:

Build and publish the Spin application

For this tutorial, we’ll use a Spin key/value

application written with the

Go SDK. The application serves a CRUD (Create, Read, Update, Delete) API for managing key/value

pairs.

First, clone the repository locally and navigate to the examples/key-value directory:

git clone git@github.com:fermyon/spin-go-sdk.git

cd examples/key-value

Now, build and push the application to a registry you have access to. Here we’ll use

ttl.sh:

export IMAGE_NAME=ttl.sh/$(uuidgen):1h

spin build

spin registry push ${IMAGE_NAME}

Since we have access to a Kubernetes cluster already running SpinKube, we’ll choose

Valkey for our key value provider and install this provider via Bitnami’s

Valkey Helm chart. Valkey is swappable

for Redis in Spin, though note we do need to supply a URL using the redis:// protocol rather than

valkey://.

helm install valkey --namespace valkey --create-namespace oci://registry-1.docker.io/bitnamicharts/valkey

As mentioned in the notes shown after successful installation, be sure to capture the valkey

password for use later:

export VALKEY_PASSWORD=$(kubectl get secret --namespace valkey valkey -o jsonpath="{.data.valkey-password}" | base64 -d)

Create a Kubernetes Secret for the Valkey URL

The runtime configuration will require the Valkey URL so that it can connect to this provider. As

this URL contains the sensitive password string, we will create it as a Secret resource in

Kubernetes:

kubectl create secret generic kv-secret --from-literal=valkey-url="redis://:${VALKEY_PASSWORD}@valkey-master.valkey.svc.cluster.local:6379"

Prepare the SpinApp manifest

You’re now ready to assemble the SpinApp custom resource manifest for this application.

- All of the key value config is set under

spec.runtimeConfig.keyValueStores. See the

keyValueStores reference guide for more details. - Here we configure the

default store to use the redis provider type and under options supply

the Valkey URL (via its Kubernetes secret)

Plug the $IMAGE_NAME and $DB_URL values into the manifest below and save as spinapp.yaml:

apiVersion: core.spinkube.dev/v1alpha1

kind: SpinApp

metadata:

name: kv-app

spec:

image: "$IMAGE_NAME"

replicas: 1

executor: containerd-shim-spin

runtimeConfig:

keyValueStores:

- name: "default"

type: "redis"

options:

- name: "url"

valueFrom:

secretKeyRef:

name: "kv-secret"

key: "valkey-url"

Create the SpinApp

Apply the resource manifest to your Kubernetes cluster:

kubectl apply -f spinapp.yaml

The Spin Operator will handle the creation of the underlying Kubernetes resources on your behalf.

Test the application

Now you are ready to test the application and verify connectivity and key value storage to the

configured provider.

Configure port forwarding from your local machine to the corresponding Kubernetes Service:

kubectl port-forward services/kv-app 8080:80